Prompts in the open: China rules for LLMs

A content classification dataset for an LLM in China, or how LLMs are trained in China.

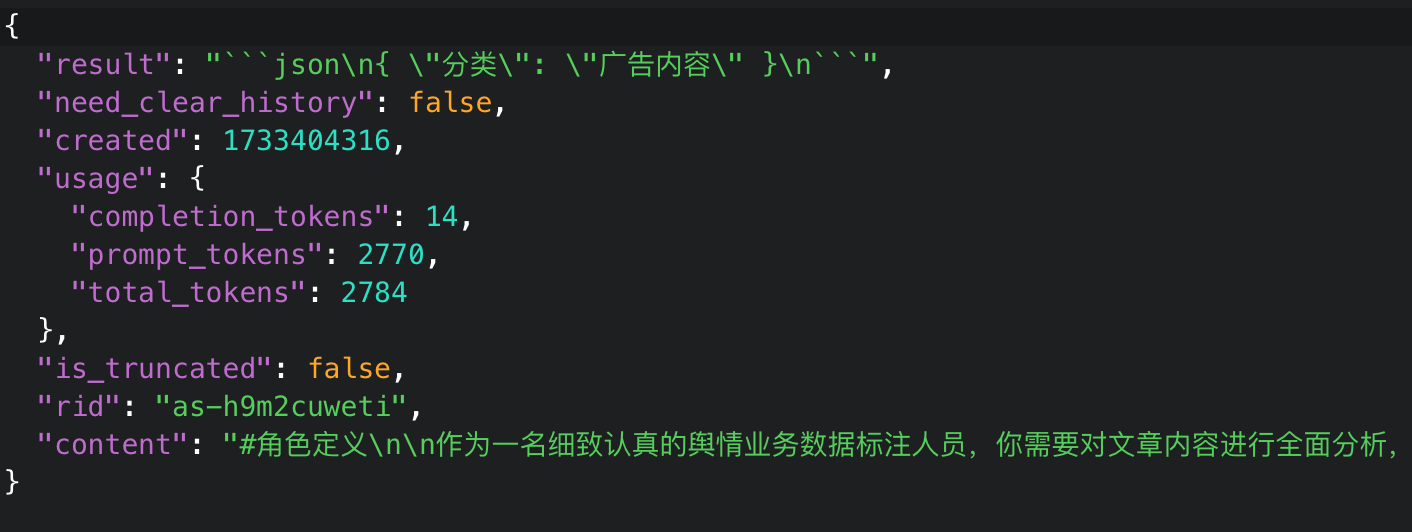

Recently, I came across a fascinating dataset in the wild that appears to represent how a Chinese large language model (LLM) classifies its data. This dataset is approximately 300GB in size, consisting of JSON files. Each file includes a classification prompt alongside a corresponding content string, which I'll refer to as the "content target." The most recent entry in this dataset is dated December 2024.

The "content targets" encompass a diverse array of topics, including news headlines, social media-style comments, government statements, and articles on travel and leisure. They look like real-world examples. It’s a wild mix, likely curated to ensure a diverse baseline for classification.

Key Insights from the Dataset

What stood out to me, beyond the variety of content, was the "qualification" prompt. This prompt provides explicit instructions to the LLM on how to classify and prioritize information. Although I’m not an LLM expert, here are my observations:

Primary Focus on Public Opinion Classification (舆情相关):

The dataset’s main purpose seems to be classifying content related to public opinion. This is highlighted as a primary focus.

Priority Structure:

The classification system has a clear priority hierarchy. The top priority (优先级最高) is assigned to three main categories:

军事动态 (Military Dynamics)

社会动态 (Social Dynamics)

时政动态 (Political Dynamics)

These categories are explicitly labeled as “舆情相关(优先级最高)” (public opinion related, highest priority).
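To make the priority structure concrete, here is a minimal Python sketch of how a "top-priority categories win" rule could be applied when a piece of content matches several categories. Only the three top-priority labels come from the prompt. The function name `resolve_category`, the other labels (财经 for finance, 旅游 for travel), and the fallback behaviour are assumptions made up for illustration, not something the dataset specifies.

```python
# Hypothetical sketch: the three top-priority labels come from the prompt;
# the function name, the other labels, and the fallback rule are assumed.
TOP_PRIORITY = ["军事动态", "社会动态", "时政动态"]  # Military, Social, Political


def resolve_category(candidates):
    """Pick one label from the categories a text appears to match.

    A top-priority (public opinion) label wins over any other label.
    If no top-priority label is present, the first candidate is kept.
    """
    if not candidates:
        raise ValueError("at least one candidate category is required")
    for label in candidates:
        if label in TOP_PRIORITY:
            return label
    return candidates[0]


# A finance story with political implications is filed under Political Dynamics.
print(resolve_category(["财经", "时政动态"]))
# A pure travel piece keeps its own (assumed) label.
print(resolve_category(["旅游"]))
```

The point of the sketch is only the ordering: a specialized label never hides a public-opinion label when both apply.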

Hierarchical, Not Exclusive, Classification:

The instructions recognize that content can overlap across categories. For example, a piece may relate to technology or finance but will still be classified under one of the top-priority categories if it has significant military, social, or political implications. This ensures incidents or controversies are flagged promptly rather than being buried under more specialized topics.

Two Instruction Sets:

The dataset includes two distinct instruction sets: one optimized for speed and another for accuracy.

The “speedy” instructions might classify politically charged content under 社会动态 (Social Dynamics) for faster processing.

The “accurate” set more consistently places such content under 时政动态 (Political Dynamics) to refine annotations and ensure high-sensitivity topics are appropriately categorized.
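One way to check how the two instruction sets differ would be to tally where their labels diverge for the same content. The sketch below assumes the label pairs have already been extracted by some other means. The function name `tally_disagreements` is hypothetical, and the sample pairs are made up for illustration, not rows from the dataset.

```python
from collections import Counter


def tally_disagreements(label_pairs):
    """Count (speedy_label, accurate_label) pairs where the two differ."""
    return Counter(
        (speedy, accurate)
        for speedy, accurate in label_pairs
        if speedy != accurate
    )


# Made-up label pairs, NOT real dataset rows: (speedy label, accurate label).
sample_pairs = [
    ("社会动态", "时政动态"),
    ("社会动态", "社会动态"),
    ("社会动态", "时政动态"),
    ("军事动态", "军事动态"),
]

for (speedy, accurate), count in tally_disagreements(sample_pairs).items():
    print(f"{speedy} -> {accurate}: {count}")
```

A large count for the 社会动态 to 时政动态 pair on real data would support the observation above. A small one would suggest the two sets mostly agree.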

Targeting Political Discussions:

Content related to 贪腐 (corruption) and 特权阶级 (privileged classes), while socially relevant, is deliberately categorized under 时政动态. This suggests an effort to align these topics with higher sensitivity and prioritize them for review or action. Such deliberate structuring tracks with what I’ve observed as a journalist in China, where political discussions, especially about corruption, are carefully monitored.

Explicit Reference to Taiwan:

Interestingly, “Taiwan” is explicitly mentioned under 时政动态 (Political Dynamics). While not used as an exclusive criterion, its presence is notable since no other specific country, subject, or entity receives the same treatment. The instructions elaborate: “Content related to politics and government, including government policies, current affairs figures, social governance, international information, and Taiwan's political situation, etc., should be prioritized under ‘Current Affairs Dynamics.’” This reflects a heightened focus on Taiwan’s political situation, aligning with broader patterns of prioritization in Chinese discourse.

The dataset has references to “eb35” and “eb_speedpro” in each prompt, hinting that this training set is indeed meant for Baidu’s “Ernie Bot” (“eb35” is probably “Ernie 3.5”, and “eb_speedpro” is probably a reference to the high-performance version of Ernie Bot).
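A quick way to verify how widespread these markers are would be to count them across the JSON files. This is a rough sketch, assuming each file holds a single JSON object and that the prompt text sits under a key called `prompt`. The key name, the function names, and the directory path are placeholders, not the dataset's actual schema.

```python
import json
from collections import Counter
from pathlib import Path

MARKERS = ("eb35", "eb_speedpro")  # strings seen in the prompts


def count_markers(prompt_texts):
    """Count how many prompts mention each marker at least once."""
    counts = Counter()
    for text in prompt_texts:
        for marker in MARKERS:
            if marker in text:
                counts[marker] += 1
    return counts


def iter_prompts(directory, key="prompt"):
    """Yield the prompt string from every *.json file in a directory.

    The key name "prompt" is an assumption, not the dataset's real schema.
    """
    for path in sorted(Path(directory).glob("*.json")):
        with path.open(encoding="utf-8") as handle:
            record = json.load(handle)
        if isinstance(record, dict) and isinstance(record.get(key), str):
            yield record[key]
```

With those assumptions, `count_markers(iter_prompts("path/to/dataset"))` would return a per-marker count. Because `iter_prompts` is a generator, files are read one at a time rather than loading all 300GB into memory.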

The Dataset’s Broader Implications

Despite the structured and hierarchical classification system, the "content targets" span a wide range of topics, making it unclear what specific goal the LLM aims to achieve beyond general data classification. Given that the LLM operates on servers belonging to a large Chinese internet organization, it makes sense to deploy such a tool. However, the explicit hierarchical instructions and sensitivity to political content offer a fascinating glimpse into how such systems are managed.

Errata: I just remembered that in 2024 there was a directive from the central government in China to increase labeling of AI-generated content. Technically, that would not apply to the content the LLM is trained against in this dataset, but I thought it was worth adding.

Call for Expertise

If you have expertise in LLMs or related fields, I’d love to hear your thoughts or insights. Feel free to reach out!